Open DataQoS as Code

Data QoS as Code represents a groundbreaking shift in data management, merging the principles of Data Quality and Service-Level Agreements into an integrated framework. This approach leverages the concepts of network Quality of Service (QoS) for monitoring data service performance metrics such as packet loss, throughput, and availability. By adopting the Everything as Code philosophy, Data QoS as Code introduces a method for automating, scaling, and securing data monitoring and management. It utilizes a vendor-neutral, YAML-based specification to facilitate this, offering a streamlined and efficient solution for handling complex data quality and service-level requirements.

Specification aims:

- Define DataQoS with YAML as a machine-readable vendor-neutral open specification

- Define data quality and service quality with 19 indicators as a holistic yet flexible reusable component

- Extend the DataQoS concept with Everything as Code to enable monitoring and define the business-driven threshold requirements

Note! 'Open' refers to the openness of the standard. Any kind of connotations to open data (a different thing) are not intentional, intended, or desirable.

Experimental development version

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “NOT RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in BCP 14 [RFC2119] [RFC8174] when, and only when, they appear in all capitals, as shown here.

The specification is shared under the Attribution-ShareAlike 4.0 International (CC BY-SA 4.0) license. Maintainer and igniter: Jarkko Moilanen.

Background in a larger research project

This experimental specification is part of a larger PhD research project. More information about the research and news regarding Open DataQoS as Code can be found in the Medium publication "Exploring the Frontier of Data Products".

VERSION DETAILS

Version source:

Editors:

Participate:

Introduction

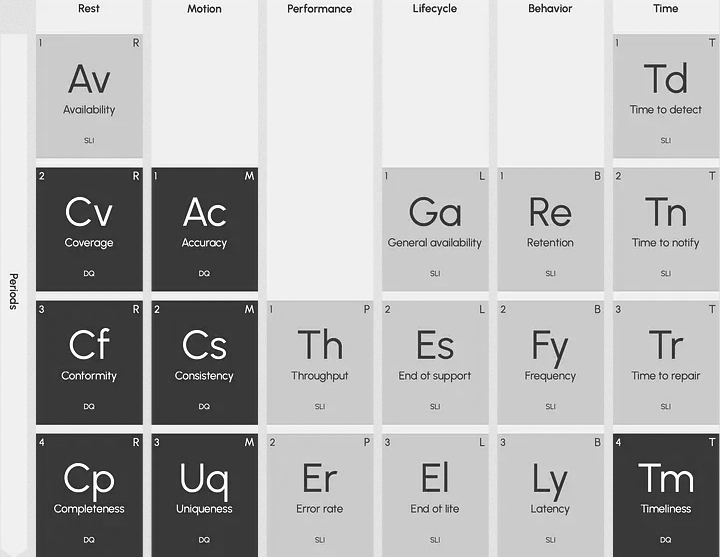

Data Quality of Service (Data QoS) is a concept created by Jean-Georges Perrin that merges Data Quality (DQ) with Service-Level Agreements (SLA). It draws parallels with Quality of Service (QoS) in network engineering, which measures service performance using criteria such as packet loss, throughput, and availability. Data QoS addresses the complexity of measuring data attributes as businesses evolve. Inspired by Mendeleev's periodic table, the author of Data QoS proposes combining Data Quality and SLA elements into a unified framework for better data observation. This approach aims to simplify data management amid growing business needs.

Source: "What is Data QoS, and why is it critical?"

Open Data Quality of Service as Code (Open Data QoS as Code) is built upon the above concept and adds the Everything as Code philosophy to it in order to enable monitoring. "Everything as Code" (EaC) is a development practice that extends the principles of version control, testing, and deployment, traditionally applied to software development, to all aspects of IT operations and infrastructure. This approach treats manual processes and resources (such as infrastructure provisioning, configuration, network setup, and security policies) as code. By doing so, it enhances repeatability, scalability, and security across the entire IT landscape. EaC allows for the automation of complex systems, making it easier to replicate environments for development, testing, and production purposes. It signifies a shift towards a more systematic, software-defined management of IT resources, aiming to improve efficiency, reduce errors, and increase the speed of deployment and operational tasks.

More information about Open Data QoS as Code

This experimental specification of Open DataQoS as Code is part of a larger PhD research project. More information about the research and news regarding Open DataQoS as Code can be found in the Medium publication "Exploring the Frontier of Data Products".

If you see something missing, described inaccurately or plain wrong, or you want to comment on the specification, raise an issue on GitHub.

Document structure

LEFT COLUMN: Navigation

The left column is navigation which enables fluent and easy movement around the specification.

MIDDLE COLUMN: Principles and components

The middle column contains detailed information about the included components and related options. This is the theory part.

Note! Mandatory elements and attributes are listed separately in the definition tables. This enables the user to construct a minimum viable specification more easily and quickly. Ready-made definitions from https://schema.org are applied whenever possible instead of reinventing the wheel.

RIGHT COLUMN: Examples

The right column contains YAML-formatted examples of how the specification is used. Other output formats may be added in the future on request. YAML can easily be converted to JSON if needed.

Example of YAML formatted snippet from the Open Data Product Specification:

monitoring:
  url: https://monitoring.com
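
The YAML-to-JSON conversion mentioned earlier can be illustrated with the snippet above. This is a hand-converted sketch, not the output of any particular tool. Because YAML 1.2 accepts JSON-style flow syntax, the block below should be readable by both JSON and YAML parsers.

```yaml
{
  "monitoring": {
    "url": "https://monitoring.com"
  }
}
```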

Data QoS

The Data Quality of Service object contains attributes that define the desired and promised quality of the data product from the data quality and service quality points of view.

The object follows the Data QoS model created by Jean-Georges Perrin. Data QoS contains 19 indicators. "As code" refers to Everything as Code, the practice of treating all parts of the system as code. Each indicator is an object that has both threshold values set by the business and an "as code" spec to monitor and verify the wanted quality level.

In this trial, mandatory structure elements are not defined and everything is marked as optional, including the DataQoS object. Whether it should be mandatory, and to what extent, is a good question and a separate exercise.

Usage guidance

In the final version 1.0 you choose, from the 19 indicators, those which match your needs. In this experimental version just 4 indicators have been defined to exemplify the structure and schemas used.

It is not expected that all use cases require 19 indicators or even that all use cases require both service and data quality indicators to be used. In the application of the Open DataQoS as Code standard, users select which indicators to use.

- Some might use it only to define threshold values and monitoring rules for Data Quality (Data Quality Focus)

- Others use just the Service Quality aspect of it. (Service Quality Focus)

- In some use cases some of both aspects are applied by including data quality and service quality indicators in the specifications. (Mixed Focus)

You can access details of each indicator from the navigation. In the provided example you can see two indicators used (conformity and error rate).

Optional attributes and elements

Example of DataQoS component usage:

DataQoS:
  conformity:
    description: ""
    monitoring:
      type: SodaCL
      objectives:
        - displayName: Conformity
          target: 90
      spec:
        - gender: matches("^(Male|Female|Other)$")
        - age_band: matches("^\\d{2}-\\d{2}$") # Assuming age bands are in the format 20-29, 30-39, etc.
  errorRate:
    description: ""
    monitoring:
      type: OpenSLO
      spec: # inside the spec we use OpenSLO standard, https://github.com/openslo/openslo
        objectives:
          - displayName: Total Errors
            target: 0.98
        ratioMetric:
          counter: true
          good:
            metricSource:
              type: Prometheus
              metricSourceRef: prometheus-datasource
              spec:
                query: sum(localhost_server_requests{code=~"2xx|3xx",host="*",instance="127.0.0.1:9090"})
          total:
            metricSource:
              type: Prometheus
              metricSourceRef: prometheus-datasource
              spec:
                query: localhost_server_requests{code="total",host="*",instance="127.0.0.1:9090"}

| Indicator name | Type | Options | Description |
|---|---|---|---|
| DataQoS | element | - | Binds the Data QoS related elements and attributes together |

Additional common unified fields used in 19 indicators

| Common unified fields | Type | Options | Description |
|---|---|---|---|
| description | string | max length 256 chars | Brief description of the indicator |
| objectives | array | array | Contains both name and target value. See example. Formatting of objectives is defined in OpenSLO. |
| monitoring | element | - | Binds together the "as code" description of the DataQoS indicator. Every indicator has a monitoring part as well. |
| type | string | max length 50 chars | Value indicates the system or standard used, for example DataDog, OpenSLO, SodaCL, Montecarlo, or custom. Helps in identifying what to expect in the actual spec content. |
| spec | string | - | The indicator spec can be encoded as a string or as inline YAML. Its formatting depends on the type selected. |
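
The two encodings of spec mentioned in the table can be sketched as follows. This is only an illustration under assumptions: the dataset name members and the column member_id are hypothetical, the check uses SodaCL's missing_percent syntax, and the specification itself does not prescribe either form.

```yaml
# Illustrative sketch only; "members" and "member_id" are hypothetical names.
DataQoS:
  completeness:
    description: "Spec encoded as a string (YAML block scalar)"
    monitoring:
      type: SodaCL
      objectives:
        - displayName: Completeness
          target: 98
      # Variant 1: the whole spec is a single string, handed unchanged to the tool named in "type"
      spec: |
        checks for members:
          - missing_percent(member_id) < 2%
---
DataQoS:
  completeness:
    description: "Spec encoded as inline YAML"
    monitoring:
      type: SodaCL
      objectives:
        - displayName: Completeness
          target: 98
      # Variant 2: the same content as nested YAML, parsed together with the rest of the document
      spec:
        checks for members:
          - missing_percent(member_id) < 2%
```

The string form keeps tool-specific syntax opaque to a YAML parser, while the inline form can be parsed and validated together with the surrounding document.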

Availability

The availability of the service/data. Use the common SLA approach to define the percentage of guaranteed availability.

Components

Example of availability indicator usage

DataQoS:
  availability:
    description: ""
    monitoring:
      type: OpenSLO
      spec: # inside the spec we use OpenSLO standard, https://github.com/openslo/openslo
        objectives:
          - displayName: Availability
            target: 0.98
        ratioMetric:
          counter: true
          good:
            metricSource:
              type: Prometheus
              metricSourceRef: prometheus-datasource
              spec:
                query: sum(localhost_server_requests{code=~"2xx|3xx",host="*",instance="127.0.0.1:9090"})
          total:
            metricSource:
              type: Prometheus
              metricSourceRef: prometheus-datasource
              spec:
                query: localhost_server_requests{code="total",host="*",instance="127.0.0.1:9090"}

| Component name | Type | Options | Description |
|---|---|---|---|
| availability | element | - | Binds together the availability indicator description with objectives and monitoring. Follows the OpenSLO standard model. |
| description | attribute | string | Short description used for displaying more detailed information to consumers and operations staff. |
| monitoring | object | - | Binds together both the monitoring and the objectives (threshold values) structure. |
| type | attribute | string | Defines the standard used in describing the monitoring object content. Can also be vendor specific, such as MonteCarlo and SodaCL. Details in type definition (link). |
| spec | object | - | Inside this object you write the type-specific description of objectives and monitoring as code. |
| objectives | array | - | Define the objectives (threshold values) for the expected quality of this indicator. |
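
As a rough worked example of what such a percentage means in practice, an availability target can be translated into an allowed-downtime budget. The 30-day (720-hour) measurement window is an assumption made for illustration, since the specification does not define one. The 0.98 target is taken from the example above.

$$
\text{allowed downtime} = (1 - \text{target}) \times \text{window} = (1 - 0.98) \times 720\ \text{h} = 14.4\ \text{h}
$$

Under that assumption, a target of 0.98 tolerates roughly 14.4 hours of unavailability per 30 days.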

Conformity

Data content must align with required standards, syntax (format, type, range), or permissible domain values. Conformity assesses how closely data adheres to standards, whether internal, external, or industry-wide.

Components

Example of conformity indicator usage

DataQoS:
  conformity:
    description: ""
    monitoring:
      type: SodaCL
      objectives:
        - displayName: Conformity
          target: 90
      spec:
        - gender: matches("^(Male|Female|Other)$")
        - age_band: matches("^\\d{2}-\\d{2}$") # Assuming age bands are in the format 20-29, 30-39, etc.

| Component name | Type | Options | Description |
|---|---|---|---|
| conformity | element | - | .... |

Completeness

Data must be populated with a value (that is, not null). Completeness checks whether all required data attributes are present in the dataset.

Components

Example of completeness indicator usage

DataQoS:
  completeness:
    description: ""
    monitoring:
      type: SodaCL
      objectives:
        - displayName: Completeness
          target: 98
      spec:
        - for each column:
            name: [member_id, gender, age_band]
            checks:
              - not null:
                  fail: when > 2% # Fail if more than 2% of records are null

| Component name | Type | Options | Description |
|---|---|---|---|
| completeness | element | - | .... |

Error rate

Use OpenSLO standard in the object to define rules in the spec. How often will your data have errors, and over what period? What is your tolerance for those errors?

Components

Example of error rate indicator usage

DataQoS:
  errorRate:
    description: ""
    monitoring:
      type: OpenSLO
      spec: # inside the spec we use OpenSLO standard, https://github.com/openslo/openslo
        objectives:
          - displayName: Total Errors
            target: 0.98
        ratioMetric:
          counter: true
          good:
            metricSource:
              type: Prometheus
              metricSourceRef: prometheus-datasource
              spec:
                query: sum(localhost_server_requests{code=~"2xx|3xx",host="*",instance="127.0.0.1:9090"})
          total:
            metricSource:
              type: Prometheus
              metricSourceRef: prometheus-datasource
              spec:
                query: localhost_server_requests{code="total",host="*",instance="127.0.0.1:9090"}

| Component name | Type | Options | Description |
|---|---|---|---|
| errorRate | element | - | .... |

TBD

Indicators to be added to the specification

| Indicator name | Type | Options | Description |
|---|---|---|---|
| coverage | element | - | All records are contained in a data store or data source. Coverage relates to the extent and availability of data present but absent from a dataset. |
| accuracy | element | - | The measurement of the veracity of data to its authoritative source: the data is provided but incorrect. Accuracy refers to how precise data is, and it can be assessed by comparing it to the original documents and trusted sources or confirming it against business spec. |
| consistency | element | - | Data should retain consistent content across data stores. Consistency ensures that data values, formats, and definitions in one group match those in another group. |
| uniqueness | element | - | How much data can be duplicated? It supports the idea that no record or attribute is recorded more than once. Uniqueness means each record and attribute should be one-of-a-kind, aiming for a single, unique data entry. |
| throughPut | element | - | Throughput is about how fast the data can be accessed. It can be measured in bytes or records per unit of time. |
| retention | element | - | How long are we keeping the records and documents? There is nothing extraordinary here; as with most service-level indicators, it can vary by use case and legal constraints. |
| frequency | element | - | How often is your data updated? Daily? Weekly? Monthly? A linked indicator to this frequency is the time of availability, which applies well to daily batch updates. |
| latency | element | - | Measures the time between the production of the data and its availability for consumption. |
| timeToDetect | element | - | How fast can you detect a problem? How fast do you guarantee the detection of the problem? |
| timeToNotify | element | - | Once you see a problem, how much time do you need to notify your users? |
| timeToRepair | element | - | How long do you need to fix the issue once it is detected? |
| timeliness | element | - | The data must represent current conditions; the data is available and can be used when needed. Timeliness gauges how well data reflects current market/business conditions and its availability when needed. |
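
Until these indicators are defined, their final shape is unknown. As a purely hypothetical sketch, assuming they will reuse the common fields (description, monitoring, type, objectives, spec) of the four indicators defined so far, a uniqueness indicator might look like the block below. The dataset name members, the column member_id, and the target value are invented for illustration. duplicate_count is a SodaCL check.

```yaml
# Hypothetical sketch; not part of the specification.
DataQoS:
  uniqueness:
    description: "No member is recorded more than once"
    monitoring:
      type: SodaCL
      objectives:
        - displayName: Uniqueness
          target: 100
      spec:
        checks for members:
          - duplicate_count(member_id) = 0
```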

Hello world example

You'll find a complete machine-readable example of a data product in the right column. It is an imaginary data product, Pets of the year, which contains derived data about the most common pets in the world. The product has 4 pricing plans, which are mostly based on a recurring subscription model. Note! Not all voluntary attributes are used in the example and multilingualism has not been fully applied.

Example of complete working Data Product specification instance:

---
schema: https://raw.githubusercontent.com/Open-Data-Product-Initiative/open-data-product-spec-dev/ddbc069196a664d0e28a0f3dc7c1c7fb49b64591/source/schema/odps-dev-json-schema.json
version: dev
product:
  en:
    name: Pets of the year
    productID: 123456are
    valueProposition: Design a customised petstore using a data product that describes
      pets with their habits, preferences and characteristics.
    description: This is an example of a Petstore product.
    productSeries: Lovely pets data products
    visibility: private
    status: draft
    version: '0.1'
    categories:
      - pets
    standards:
      - ISO 24631-6
    tags:
      - pet
    brandSlogan: Passion for the data monetization
    type: derived data
    logoURL: https://data-product-business.github.io/open-data-product-spec/images/logo-dps-ebd5a97d.png
    OutputFileFormats:
      - json
      - xml
      - csv
      - zip
    useCases:
      - useCase:
          useCaseTitle: Build attractive and lucrative petstore!
          useCaseDescription: Use case description how successful petstore chain was
            established in Abu Dhabi
          useCaseURL: https://marketplace.com/usecase1
    recommendedDataProducts:
      - https://marketplace.com/dataproduct.json, https://marketplace.com/dataproduct-another.json
  pricingPlans:
    en:
      - name: Premium subscription 1 year
        priceCurrency: EUR
        price: '50.00'
        billingDuration: year
        unit: recurring
        maxTransactionQuantity: unlimited
        offering:
          - item 1
      - name: Premium Package Monthly
        priceCurrency: EUR
        price: '5.00'
        billingDuration: month
        unit: recurring
        maxTransactionQuantity: 10000
        offering:
          - item 1
      - name: Freemium Package
        priceCurrency: EUR
        price: '0.00'
        billingDuration: month
        unit: recurring
        maxTransactionQuantity: 1000
        offering:
          - item 1
      - name: Revenue sharing
        priceCurrency: percentage
        price: '5.50'
        billingDuration: month
        unit: revenue-sharing
        maxTransactionQuantity: 20000
        offering:
          - item 1
  dataOps:
    infrastructure:
      platform: Azure
      storageTechnology: Azure SQL
      storageType: sql
      containerTool: helm
    format: yaml
    schemaLocationURL: http://192.168.10.1/schemas/2016/petshopML-2.3/schema/petstore.xsd
    scriptURL: http://192.168.10.1/rundatapipeline.yml
    deploymentDocumentationURL: http://192.168.10.1/datapipeline
    dataLineageTool: Collibra
    dataLineageOutput: http://192.168.10.1/lineage.json
    hashType: SHA-2
    checksum: 7b7444ab8f5832e9ae8f54834782af995d0a83b4a1d77a75833eda7e19b4c921
  dataAccess:
    type: API
    authenticationMethod: OAuth
    specification: OAS
    format: JSON
    documentationURL: https://swagger.com/petstore.json
  DataQoS:
    availability:
      description: Availability SLA level of the data product.
      unit: integer
      objective: 95
      monitoring:
        type: DataDog
        format: yaml
        rules:
          - ....as code....
    coverage:
      description: ""
      unit: percentage
      objective: 100
      monitoring:
        type: SodaCL
        format: yaml
        rules:
          - ....as code....
    conformity:
      description: ""
      unit: percentage
      objective: 100
      monitoring:
        type: SodaCL
        format: yaml
        rules:
          - ....as code....
    completeness:
      description: ""
      unit: percentage
      objective: 90
      monitoring:
        type: SodaCL
        format: yaml
        rules:
          - ....as code....
  license:
    scope:
      definition: The purpose of this license is to determine the terms and conditions
        applicable to the licensing of the data product, whereby Data Holder grants
        Data User the right to use the data.
      language: en-us
      restrictions: Data User agrees not to, directly or indirectly, participate in
        the unauthorized use, disclosure or conversion of any confidential information.
      geographicalArea:
        - EU
        - US
      permanent: false
      exclusive: false
      rights:
        - Reproduction
        - Display
        - Distribution
        - Adaptation
        - Reselling
        - Sublicensing
        - Transferring
    termination:
      terminationConditions: Cancellation before 30 days. After the expiry of the
        right of use, the product and its derivatives must be removed.
      continuityConditions: Expired license will automatically continue without written
        cancellation (termination) by Data Holder
    governance:
      ownership: Mindmote Oy, a company specializing in pet industry insights, owns
        the license to its proprietary data product 'Pets of the Year'.
      damages: During the term of license, except for the force majeure or the Data
        Holder's reasons, Data User is required to follow strictly in accordance with
        the license. If Data User wants to terminate the license early, it needs to
        pay a certain amount of liquidated damages.
      confidentiality: Data User undertakes to maintain confidentiality as regards
        all information of a technical (such as, by way of a non-limiting example,
        drawings, tables, documentation, formulas and correspondence) and commercial
        nature (including contractual conditions, prices, payment conditions) gained
        during the performance of this license.
      applicableLaws: This license shall be interpreted, construed and enforced in
        accordance with the law of Finland, including Copyright Act 404/1961.
      warranties: Data Holder makes no warranties, express or implied, guarantees
        or conditions with respect to your use of the data product. To the extent
        permitted under local law, Data Holder disclaims all liability for any damages
        or losses, including direct, consequential, special, indirect, incidental
        or punitive, resulting from Data User use of the data product.
      audit: Data Holder will reasonably cooperate with Data Users by providing available
        additional information about the data product. Both parties will bear their
        own audit-related costs.
      forceMajeure: Both parties may suspend their contractual obligations when fulfillment
        becomes impossible or excessively costly due to unforeseeable events beyond
        their control, such as strikes, fires, wars, and other force majeure events.
  dataHolder:
    taxID: 12243434-12
    vatID: 12243434-12
    businessDomain: Data Product Business
    logoURL: https://mindmote.fi/logo.png
    description: Digital Economy services and tools
    URL: https://mindmote.fi
    telephone: "+358 45 232 2323"
    streetAddress: Koulukatu 1
    postalCode: '33100'
    addressRegion: Pirkanmaa
    addressLocality: Tampere
    addressCountry: Finland
    aggregateRating: ''
    ratingCount: 1245
    slogan: ''
    parentOrganization: ''

Editors and contributors

This specification is openly developed and a lot of the work comes from the community. We list all community contributors as a sign of appreciation. The editor (and initial creator of the specification) is Jarkko Moilanen. Editors take the feedback and draft new candidate releases, which may become versions of the specification.